The Homeview Project

One of the most fundamental questions in psychology concerns the role of experience. What are the essential components of human experience? What are the malleable points at which small differences in experience can lead to different developmental outcomes? What are the mechanisms that underlie developmental change?

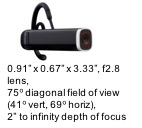

Answering all these questions requires that we know much more than we do about everyday experience. With these larger questions in mind, the Homeview project is collecting a large corpus of infant-perspective scenes (recorded with head cameras) and audio in the home as infants 1 to 24 months of age go about their daily lives.

Watch our video. IU professors Linda Smith, David Crandall and Karin James talk about how IU Bloomington's first Emerging Areas of Research initiative, called "Learning: Machines, Brains, and Children," will revolutionize our understanding of how children, and robots, learn. The Homeview Project is a core part of this larger initiative. Learn more here.

This project is funded by the National Science Foundation, and in part by an Emerging Area of Research Award from Indiana University.

Parent View

Child View
